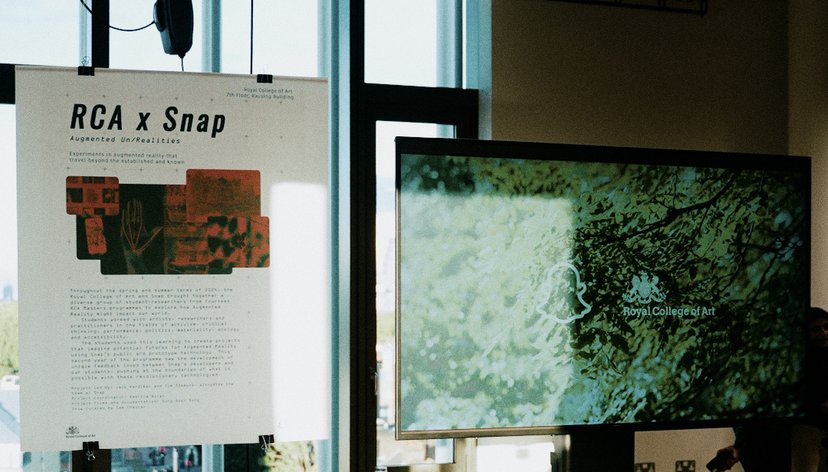

A year-long programme bringing Royal College of Art students together with leading artists, researchers and XR professionals to explore the potential uses and implications of Augmented Reality technologies with Snap Inc.

When you have to choose between truth and legend, print the legend. In the legend of L'arrivée d'un train en gare de La Ciotat, audiences at the Lumière brothers’ first public film screening ran from a train moving towards them on screen. The only problem? This did not happen. Audiences did not run – in fact, the film was not even shown that day. Nonetheless, this enduring image from 1895 is still used today to describe the immersive power of film. The closest we get to this mythical effect today is with technologies like Virtual and Augmented Reality. When experiencing these new, imagined realities, the audience member ducks and dives, trying to touch or avoid virtual objects that their brain tells them are physically there.

Throughout the spring and summer terms of 2023, the RCA and Snap Inc brought together a diverse group of student-researchers from twelve RCA Master’s programmes to explore how Augmented Reality might impact our world. Led by Jack Hardiker, Tom Simmons and Dr Stacey Long-Genovese, students worked with artists, researchers and practitioners in the fields of social activism, critical thinking, performance, politics, ecology, accessibility and technology. The students used this learning to create eight projects that imagine inclusive futures for Augmented Reality, using Snapchat’s software, Lens Studio, and the unreleased wearable Augmented Reality glasses, ‘Spectacles’. The programme returned in 2024 with a larger cohort and an even wider range of creative disciplines represented.

Films produced by Sung Hoon Song with programme participants and staff.

Projects

Gateway to Reality

Gateway to Reality invites individuals of all ages to delve into Aristotle’s philosophical framework of the five senses. Drawing inspiration from De Anima, the seminal work by Aristotle (384–322 B.C.), our Augmented Reality experience unravels the interconnectedness of the senses and the four elemental forces: fire, air, water and earth. By challenging Aristotle's ideas through sight, hearing, taste, smell and touch, participants are invited to engage with a contemporary understanding of senses, reality and self-perception.

Wearing Snap’s Spectacles, participants encounter four tactile containers, each representing an element. Augmented Reality blurs traditional notions of perception through sense hacking methods pioneered by Charles Spence. The portable nature of the containers reflects the ethos of Aristotle's Peripatetic School, renowned for its emphasis on movement and knowledge sharing.

Gateway to Reality encourages spatial engagement through movement. This draws on Robert Bárány's pioneering research into the vestibular system in the early 1900s, which points to a sixth sense and further challenges Aristotle’s concept of five senses. Through Augmented Reality and sense hacking methods, Gateway to Reality is a reintroduction to the senses as they were once known.

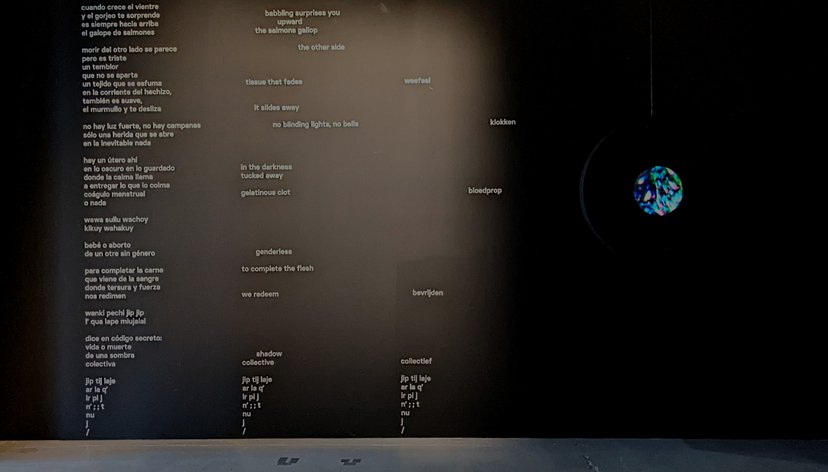

Oscillating Cultures

“Oscillating Cultures” is a multi-device AR artwork that critiques cultural consumption, perception, and the pervasive role of AI in generating simulated images.

Within the artwork, three phones are set to interact with each other, with each phone’s interface influencing the others. Tapping actions generate face filters based on cultural choices, initially taking on the appearance of a commercial advertisement. As choices narrow, corresponding face filters are reflected on the other phones as stereotypes or classified depictions. This intentional use of discomfort aims to parallel modern internet recommendation algorithms, presenting them in an arbitrary and absurd dadaistic manner.

The entire interaction highlights human tendencies to classify cultures and the issues that are inherent in button-driven interfaces of contemporary internet culture. Linked to AI training, user interactions, stereotypes, and biases, “Oscillating Cultures” underscores how both humans and AI often fail to perceive individuals from different cultures as unique entities, opting instead to impose stereotypical imagery onto them.

Throughout the rhythmic oscillation within the collage of faces, underrepresented cultures are blended and juxtaposed, offering a reflection on how cultures merge and inducing the Verfremdungseffekt (Bertolt Brecht’s distancing effect). The artwork ultimately prompts contemplation on the endless classification cycle in machine learning, calling viewers to consider the harmful impact of classification and AI within their current environments.

Rooted

Breathe to connect with the natural world in this Augmented Reality experience.

The act of breathing is both essential to human life and part of a cycle that feeds plant and tree growth. As we breathe out, plants and trees absorb the carbon dioxide we emit and produce the oxygen we need to survive. This symbiotic relationship allows us to connect with the natural world in a set of actions that are familiar to both humans and plants.

We have used Snap’s Spectacles to engage the human senses and increase connection and intimacy with the natural world. Inspired by the breathing practice of Pranayama, our project draws attention to the life energy that is contained in our bodies and the control we have over how we release our energy into the world. The tessellated eight-pointed stars are based on the “Breath of the Compassionate”, an Islamic geometric pattern symbolising symmetry, harmony and unity. With the Spectacles, follow the sounds of affirmation, surrounded by tessellations that inhale and exhale with you.

Additionally, we invite participants from anywhere in the world to join us in a workshop where we will collectively breathe with the intention of nurturing our relationships with the nature around us.

Child’s Play

“The ability to retain a child’s view of the world, at the same time with a mature understanding of what it means to retain it, is extremely rare” - Mortimer J. Adler

Our project harnesses the potential of ‘child's play’ to tackle the elusive creative block that often hinders innovative expression. We have combined machine learning with Lens Studio by implementing interactive mechanics. This includes customising a hand tracking template to accurately detect when a user's hand interacts with a specific object (in this case, a real object). Additionally, Augmented Reality is used to modify the appearance and behaviour of surfaces, effectively converting physical touch into digital interactions to make a user's experience more immersive and responsive.

Child’s Play is a “peek-a-boo moment” that gently nudges users to reconnect with their inner child, unpacking a ‘creative mindset’ long nestled, yet perhaps overlooked, within their memories. With AR as our canvas, our vision was simple: dismantle what’s in your thoughts, wrap it with a filter, all while turning these intangible, abstract ideas into vivid, experiential stories you can see, touch, feel and tweak.

About Us

About Us is a project that is centred around creating a unique alternative meeting space with and for disabled people, in a post-digital world. Our AR prototype Lens is the entry point for a community-led space that addresses accessibility issues within the wider Snap community.

We are ‘About Us’, a new collective that responds to the rapid technological developments in our current society by leading conversations and fostering a communal space with and for disabled people.

With diverse backgrounds and disciplines, each of us plays a role in researching and developing accessibility tools for future technologies. In particular, we focus on Augmented Reality. We believe that AR spaces can be more accessible than Virtual Reality alternatives, as users do not need access to expensive VR headsets to participate in extended realities and immersive experiences.

We discovered that the accessibility features within Snapchat are rather limited. This observation extends to Snap lenses, including those intended for upcoming Spectacles.

Observing the well-known disability rights slogan “nothing about us without us”, we are committed to creating a space within the Snap community, led by and for disabled people. This space invites people to contribute to the discourse on technology accessibility via our website forum and real-time augmented gatherings, facilitated by our prototype “ABOUT US” Lens and Snap’s Spectacles. While the experience is crafted with these platforms in mind, it is equally accessible through the Snapchat app on iOS or Android.

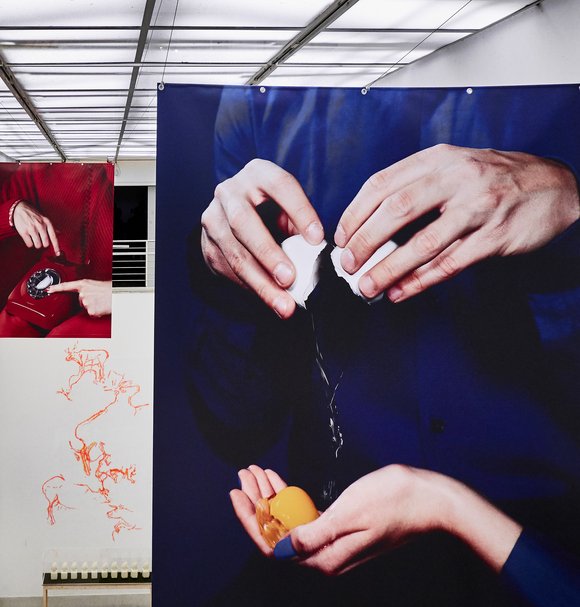

Neo Nature

Our group aims to present a new ecology of the body and identity as a hybrid natural landscape, seen through a queer perspective. We do this with a series of ecologically sensitive, fluid AR sculptures shown alongside mixed-media sculptures made from 3D-printed material and moss.

We created a series of ecologically-sensitive fluid AR and mixed-media sculptures that hybridise natural ecology and the body, presenting a new ecology of the body as a natural landscape. Here, the soul, invisible to the naked eye, is a new substance.

In the Tao Te Ching, "Xuan Pin" refers to the dark valley of the stream, and also to the vagina. It represents a kind of Tao that gives birth to all things in heaven and earth. The new identity represented by the fluid intermingling of nature and the human coincides with the concept of queerness.

Queerness is not only related to gender; it is also philosophical, representing a flowing state. We incorporate queer ecology into environmental studies to reimagine the relationship between humans and nature, where gender diversity and ecological diversity are two sides of the same coin. We do so by creating hybrid organisms and diverse ecologies that break down bodily boundaries and celebrate the richness of different identities and life forms.

The body, identity, nature, ecology, digitisation and the close connections between different species, living and non-living (technology), are fields that interact with and interpenetrate one another to shape a new ecology of the body and identity.

Mindscape

We are making journaling a mindful, spatial experience through the use of augmented clouds, controlled by speech and gestures.

Approaching the act of journaling through the lens of mindfulness, Mindscape invites users to create a personalised headspace through speech whilst wearing Snap’s Spectacles. The traditional practice of journaling through writing is augmented to make the experience interactive.

Whilst the user is wearing the Spectacles, they are encouraged to engage in a stream of consciousness through speaking. The speech is captured by the Spectacles and converted into a visual cloud which may then be controlled and manipulated using hand gestures.

This unique visual cloud, as crafted by the user, can then be captured and saved to their smartphone as a memory of that specific point in time, allowing further reflection and archiving.

ViewAir

The ViewAir AR Lens makes visible the growing problem of air pollution and heightens awareness of geographical areas of concern via the use of real-time site-specific data.

More than one in nineteen deaths in Britain’s largest towns and cities are linked to air pollution – with people living in urban areas in south-east England more likely to die from exposure to toxic air. London, Slough, Chatham, Luton, and Portsmouth had the highest proportion of deaths attributable to pollution, one study found, with around one in sixteen deaths in 2017 caused by high levels of harmful particulates in the atmosphere. (Guardian 2020)

In the natural world, lichens are sensitive to pollution and serve as helpful indicators of the quality of the surrounding air. By using lichen as a signaller in our AR experience, users will become aware of the real-time presence of pollutants in the atmosphere in their location, through changes in the lichen’s colour, shape and sound. For example, in good air quality the user will observe a healthy lichen, but in air with higher levels of pollutants, the lichen will appear listless.

Importantly, this tool for users of Snap Spectacles and other mobile devices is not intended to enable people to completely avoid problem areas, but rather should be seen as a catalyst for heightening awareness and empowering action towards cleaner spaces for all.